The Brains Behind the Buzz: A Real-Talk Guide to Understanding Neural Networks

Let’s be honest. You hear the terms ‘AI’, ‘machine learning’, and ‘deep learning’ thrown around constantly. They’re in the news, in our apps, and they’re powering the future. But what’s really going on under the hood? The answer, more often than not, is something called neural networks. If you’ve ever felt like this is some impossibly complex, sci-fi concept reserved for PhDs in white lab coats, I’m here to tell you it’s not. It’s fascinating, surprisingly intuitive, and you’re about to get a solid grip on it.

Key Takeaways

- Inspired by the Brain: Neural networks are computing systems loosely inspired by the biological neural networks that make up animal brains. They aren’t actual brains, but they borrow one key idea: learning by strengthening and weakening connections.

- Learning from Data: The core idea is that they learn to perform tasks by considering examples, generally without being programmed with task-specific rules. They find patterns on their own.

- Building Blocks: They are made of interconnected nodes called ‘neurons’ organized in layers. Information flows through these layers, gets processed, and results in an output.

- Everyday Tech: You use neural networks every day, from your phone’s facial recognition to Netflix’s movie recommendations and your email’s spam filter.

What Even *Are* Neural Networks? (A Simple Analogy)

Forget code and complex math for a second. Let’s talk about how a toddler learns to recognize a cat. You don’t sit them down with a biology textbook. You just point and say, “Look, a kitty!”

The first time, the toddler’s brain is just taking in raw data. Pointy ears. Fur. Whiskers. A tail. Meowing sound. Their brain starts to form weak connections between these features and the word “kitty.”

The next day, you see a different cat—this one is orange instead of black. The toddler might be a little confused, but you say, “That’s a kitty, too.” Their brain makes an adjustment. Okay, so color isn’t the most important feature. The pointy ears, whiskers, and tail are more reliable. The connections for those features get a little stronger.

Then they see a small dog. It has fur and a tail. They might guess, “Kitty?” You gently correct them: “No, that’s a puppy.” Their brain gets crucial feedback. The “kitty” connections weaken for that combination of features, and a new “puppy” category starts to form. Over hundreds of examples, their brain becomes incredibly accurate at identifying cats. It’s not magic; it’s pattern recognition through trial and error.

This is, loosely speaking, how neural networks learn. They are pattern-recognition machines. You feed them a massive amount of labeled data (e.g., thousands of pictures, each labeled ‘cat’ or ‘not cat’), and they adjust their internal connections until they can accurately identify the patterns themselves.

The Core Components of Neural Networks (Let’s Get a Bit Technical)

Okay, the analogy is great, but what are the actual parts? Let’s peel back one layer of the onion. The structure of these networks is what makes the learning possible. It all comes down to a few key concepts that work together in a pretty elegant way.

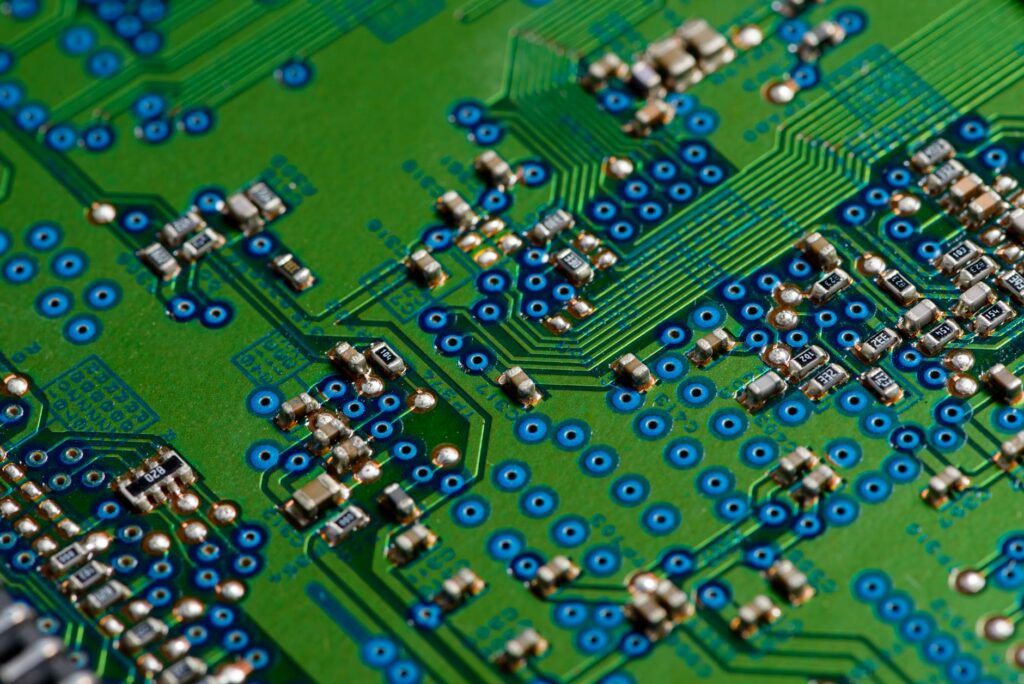

The Building Blocks: Neurons and Layers

Just like the brain has neurons, an artificial neural network has nodes. You can think of each node as a tiny little decision-maker. It receives information, does a quick calculation, and passes its result along.

These nodes aren’t just a jumbled mess; they’re organized into layers:

- The Input Layer: This is the front door. It takes in the raw data for a single example. For our cat picture, each node in the input layer might represent the brightness of a single pixel. If the image is 100×100 pixels in grayscale, you’d have 10,000 input nodes! A color image needs three values per pixel (red, green, and blue), so it would need 30,000.

- The Hidden Layers: This is where the magic happens. There can be one or many hidden layers (and when there are many, we call it “deep learning”). Each node in a hidden layer receives inputs from the previous layer, combines them, and passes the result to the next layer. Early hidden layers might learn to recognize simple things like edges or corners. Deeper layers might combine those to recognize more complex features like eyes, ears, or whiskers.

- The Output Layer: This is the final result. For our cat identifier, it might be just two nodes: one for ‘cat’ and one for ‘not cat’. The node with the higher value is the network’s prediction.
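
To make those layer sizes concrete, here is a minimal Python sketch. The helper names (`count_input_nodes`, `pick_prediction`) and the two output values are made up for illustration; they don’t come from any library or from a trained network.

```python
def count_input_nodes(width, height, channels=1):
    """One input node per pixel value: channels=1 for grayscale, 3 for RGB color."""
    return width * height * channels


def pick_prediction(output_values, labels):
    """The output node with the highest value is the network's prediction."""
    best_index = max(range(len(output_values)), key=lambda i: output_values[i])
    return labels[best_index]


grayscale_nodes = count_input_nodes(100, 100)           # 10,000 input nodes
color_nodes = count_input_nodes(100, 100, channels=3)   # 30,000 input nodes

# Hypothetical values coming out of the two output nodes for one picture.
prediction = pick_prediction([0.82, 0.18], ["cat", "not cat"])
print(grayscale_nodes, color_nodes, prediction)
```

Everything between the input count and the final pick is the job of the hidden layers.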

How Neural Networks Learn (The Magic of “Training”)

So how does the network go from a random guesser to a cat-identifying expert? Through a process called training. This involves two key ideas: a forward pass and backpropagation.

First, you take one piece of data—a picture of a cat—and you pass it through the network from the input layer to the output layer. This is the forward pass. The network, in its initial untrained state, will spit out a random guess. Let’s say it’s 30% sure it’s a cat and 70% sure it’s not a cat. That’s a bad guess.

Now comes the crucial part. We calculate the ‘error’—the difference between the network’s guess (30% cat) and the correct answer (100% cat). This is where backpropagation comes in. It’s a fancy term for a simple idea: working backward from the error and figuring out which connections in the network were most responsible for the mistake. Think of it as a manager tracing a mistake back through a chain of command.
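
If you’re wondering how that ‘error’ becomes a number, here is a small sketch of two common ways to score a guess. The article doesn’t say which measure is used, so treat both functions as illustrative assumptions rather than the one true method.

```python
import math


def squared_error(predicted, target):
    """Squared difference between the guess and the correct answer."""
    return (predicted - target) ** 2


def cross_entropy(prob_given_to_correct_class):
    """Penalty that grows quickly as the probability given to the right answer shrinks."""
    return -math.log(prob_given_to_correct_class)


guess = 0.30     # the network is 30% sure it's a cat
correct = 1.00   # it really is a cat

print(squared_error(guess, correct))   # about 0.49
print(cross_entropy(guess))            # a large penalty for a bad guess
print(cross_entropy(0.95))             # a much smaller penalty for a confident, correct guess
```

Either way, the point is the same: a worse guess produces a bigger number, and training tries to push that number down.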

Backpropagation is the process of assigning blame. It tells each tiny connection in the network, “You contributed this much to the final error, so you need to adjust yourself a little bit to do better next time.”

The network then makes tiny adjustments to all its internal ‘weights’ (the strength of the connections between nodes) to reduce that error. You then repeat this process with the next picture. And the next. And the next. You do this hundreds of thousands, or even millions, of times. Each time, the network gets a tiny bit smarter. Its internal weights are slowly tweaked and refined until it consistently gets the right answer.
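
Here is a rough sketch of that guess, measure, adjust loop in plain Python. To keep it short it trains a single neuron, so there is no chain of layers to work back through; it shows the weight-update step rather than full backpropagation. The toy features, the learning rate, and the number of passes are all assumptions chosen for the example.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0..1 so it reads like a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, bias, features):
    """Forward pass: weighted sum of the inputs, plus bias, through the sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


# Toy labeled data: [pointy_ears, whiskers, barks] -> 1 for cat, 0 for not cat.
examples = [
    ([1, 1, 0], 1), ([0, 1, 0], 1),
    ([1, 0, 1], 0), ([0, 0, 1], 0), ([0, 0, 0], 0),
]

weights = [0.0, 0.0, 0.0]
bias = 0.0
learning_rate = 0.5   # how big each "tiny adjustment" is (assumed value)
loss_history = []

for epoch in range(200):
    total_loss = 0.0
    for features, label in examples:
        guess = forward(weights, bias, features)
        # Measure the error (cross-entropy); clip to avoid log(0).
        p = min(max(guess, 1e-12), 1 - 1e-12)
        total_loss += -(label * math.log(p) + (1 - label) * math.log(1 - p))
        # For a sigmoid neuron with this loss, each weight's share of the blame
        # is (guess - label) times the input that flowed through it.
        blame = guess - label
        for i, x in enumerate(features):
            weights[i] -= learning_rate * blame * x
        bias -= learning_rate * blame
    loss_history.append(total_loss / len(examples))

print(loss_history[0], loss_history[-1])
print(forward(weights, bias, [1, 1, 0]), forward(weights, bias, [0, 0, 1]))
```

The average error should shrink from the first pass to the last, which is the ‘gets a tiny bit smarter’ part in action. Real networks repeat the same idea across many layers and far more weights.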

Weights, Biases, and Activation Functions

Let’s zoom in on a single one of those nodes in a hidden layer. It’s not just passively passing information along. It does three things:

- It receives weighted inputs: Each connection feeding into it has a ‘weight’, which represents its importance. A connection with a high weight has a strong influence on the node’s output. During training, the network is learning the optimal values for all these weights.

- It adds a bias: A bias is like a little thumb on the scale. It’s an extra number that’s added to the weighted sum of inputs. It helps the network make better decisions by allowing it to shift the output up or down, independent of the inputs.

- It applies an activation function: This is the final step. After the node has summed up all its weighted inputs and added the bias, it passes this number through an ‘activation function’. This function basically decides whether the signal from this node is strong enough to be passed on and, if so, how strong that signal should be. It’s like a dimmer switch or a simple on/off switch. Just as importantly, the activation function is non-linear, and that is what lets a stack of layers learn patterns more complicated than a straight-line relationship.
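
Those three steps fit in a few lines of Python. This is a minimal sketch: the input values, weights, and bias are invented for the example, and ReLU and sigmoid are just two common activation functions, not necessarily what any particular network uses.

```python
import math


def relu(z):
    """On/off style: pass positive signals through unchanged, block negative ones."""
    return max(0.0, z)


def sigmoid(z):
    """Dimmer-switch style: squash any signal into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    # Step 1: weighted inputs. Step 2: add the bias. Step 3: apply the activation.
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return activation(weighted_sum + bias)


inputs = [0.5, 0.8, 0.1]     # made-up signals from the previous layer
weights = [0.9, 0.4, -0.7]   # made-up importance of each connection
bias = -0.2                  # the thumb on the scale

print(neuron(inputs, weights, bias, relu))      # weighted sum 0.7, minus 0.2, so about 0.5
print(neuron(inputs, weights, bias, sigmoid))   # the same 0.5 squashed to a value between 0 and 1
print(neuron(inputs, weights, -1.0, relu))      # a more negative bias switches the node off: 0.0
```

Notice how changing only the bias flips the node from ‘on’ to ‘off’ with the exact same inputs.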

Different Flavors: Types of Neural Networks

Just like you wouldn’t use a hammer to turn a screw, you wouldn’t use the same type of neural network for every problem. They’ve evolved into specialized forms for different tasks.

Feedforward Neural Networks (FNNs)

This is the vanilla ice cream of neural networks. It’s the simplest type, the one we’ve basically been describing so far. Information flows in one direction only: from input, through the hidden layers, to the output. There are no loops. They are great for general classification and regression tasks, like predicting house prices based on features like square footage and number of bedrooms.
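
As a rough illustration of that one-way flow, here is a tiny feedforward network for the house-price example. The weights are hand-picked, not trained, so the predicted price is meaningless; the names `layer` and `predict_price` and all the numbers are assumptions made up for the sketch.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def layer(inputs, weights, biases, activation):
    """Every node takes all outputs of the previous layer, weights them, adds its bias."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hypothetical, untrained parameters: 2 inputs -> 2 hidden nodes -> 1 output node.
HIDDEN_WEIGHTS = [[0.8, 0.2], [-0.5, 0.6]]
HIDDEN_BIASES = [0.1, 0.0]
OUTPUT_WEIGHTS = [[100.0, 50.0]]
OUTPUT_BIASES = [20.0]


def predict_price(features):
    # Input -> hidden -> output. Nothing ever flows backward or loops around.
    hidden = layer(features, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, identity)
    return output[0]


# Features: [square footage in thousands, number of bedrooms].
price_in_thousands = predict_price([1.5, 3.0])
print(price_in_thousands)
```

The output node has no squashing activation here because a price is a regression target, not a yes/no answer.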

Convolutional Neural Networks (CNNs)

These are the visual experts. CNNs are specially designed for processing pixel data. Remember how we said early layers might detect edges and later layers detect eyes or ears? That’s the core idea of a CNN. They use special ‘convolutional’ layers that act like filters, scanning across an image to find specific patterns. This makes them incredibly powerful for:

- Image classification (Is this a cat or a dog?)

- Object detection (Where is the cat in this photo?)

- Facial recognition

- Medical imaging analysis
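
To see what ‘scanning a filter across an image’ means, here is a minimal sketch in plain Python. The 4×4 image and the vertical-edge filter are hand-written assumptions; a real CNN learns its filter values during training and uses an optimized library rather than nested loops. (Strictly speaking, this sliding multiply-and-sum is cross-correlation, which is what deep learning libraries typically call convolution.)

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and record each response."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 4x4 grayscale image: dark (0) on the left half, bright (1) on the right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds where brightness jumps from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)   # each row is [0, 2, 0]: the filter fires only at the edge
```

The filter stays silent over the flat dark and flat bright regions and lights up exactly where the two meet, which is the ‘edge detector’ behavior described above.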

Recurrent Neural Networks (RNNs)

These are the masters of sequence and memory. Unlike FNNs, RNNs have loops, allowing information to persist. This gives them a form of memory, making them well suited to tasks where context and order matter. Think about it: the meaning of a word in a sentence often depends on the words that came before it. RNNs and their more advanced variants (like LSTMs and GRUs) have long been used for the tasks below, although Transformers, a newer architecture that handles sequences with attention instead of loops, now power most modern language systems:

- Natural language processing (Siri, Alexa, Google Translate)

- Speech recognition

- Time-series analysis (predicting stock prices)

- Music generation
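
The ‘loop’ is easier to see in code. Below is a bare-bones sketch of a recurrent step with a single hidden value; the weights are arbitrary assumptions, and real RNNs use vectors and learned weight matrices instead of single numbers.

```python
import math


def rnn_step(x, hidden, w_input, w_hidden, bias):
    """Mix the new input with the memory of everything seen so far."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)


def run_sequence(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    hidden = 0.0   # empty memory before the sequence starts
    states = []
    for x in sequence:
        # The loop: this step's output becomes part of the next step's input.
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states


# Same three values, different order.
states_a = run_sequence([1.0, 0.0, 0.0])
states_b = run_sequence([0.0, 0.0, 1.0])

print(states_a)
print(states_b)
```

In the first sequence, the hidden value stays above zero even after the input drops to 0, so the network still ‘remembers’ the earlier 1.0. And because the two sequences end in different states, order clearly matters, which a plain feedforward network looking at one item at a time can’t capture.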

Where Do We See This in Real Life?

You’re already using this technology, probably without even realizing it. It’s not just in a lab; it’s in your pocket and in your living room.

- Spam Filtering: Many email services use machine learning models, often including neural networks, that have been trained on enormous volumes of email to recognize the subtle patterns of spam.

- Recommendation Engines: When Netflix or Spotify suggests something you might like, it’s a network that has analyzed your history and compared it to millions of other users to find patterns in taste.

- Digital Assistants: When you talk to Siri or Google Assistant, neural networks built for sequences are working to understand the order of your words and the intent behind them.

- Photo Tagging: When Facebook or Google Photos automatically suggests tagging a friend in a picture, a CNN has recognized their face.

Conclusion

So, there you have it. Neural networks aren’t a black box of digital magic. They are a powerful, brain-inspired tool for pattern recognition. They’re built from simple components—nodes and layers—that learn by making guesses, measuring their errors, and then making tiny adjustments over and over again. It’s a process of gradual refinement, a digital version of trial and error on a massive scale. The next time your phone unlocks with your face or you get a perfect movie recommendation, you can smile, knowing you understand the incredible engine working behind the scenes.

FAQ

Are neural networks the same thing as Artificial Intelligence (AI)?

Not exactly. Think of it in layers. Artificial Intelligence is the broad, overarching field of creating intelligent machines. Machine Learning is a subfield of AI that focuses on systems that learn from data. Deep Learning is a subfield of Machine Learning that uses very deep neural networks (with many layers). So, neural networks are a specific tool used to achieve AI, but they are not the whole picture.

Is it hard to learn about neural networks?

It’s as deep as you want it to be! Understanding the core concepts, like we’ve discussed here, is very accessible to anyone. You don’t need to be a math genius to grasp the ideas of layers, nodes, and learning from examples. Actually building and training them from scratch requires knowledge of programming (like Python) and some linear algebra and calculus, but there are many tools and frameworks today that make it easier than ever to get started.
